Exploring in Extremely Dark: Low-Light Video Enhancement with Real Events

TL;DR: This paper proposes the Real-Event Embedded Network (REN), which uses real events to restore details in extremely dark areas during low-light video enhancement. It introduces an Event-Image Fusion module, plus unsupervised temporal consistency and detail contrast losses that, together with a supervised loss, enable semi-supervised training on unpaired real data. Experiments on synthetic and real data show that it outperforms state-of-the-art methods.

Abstract

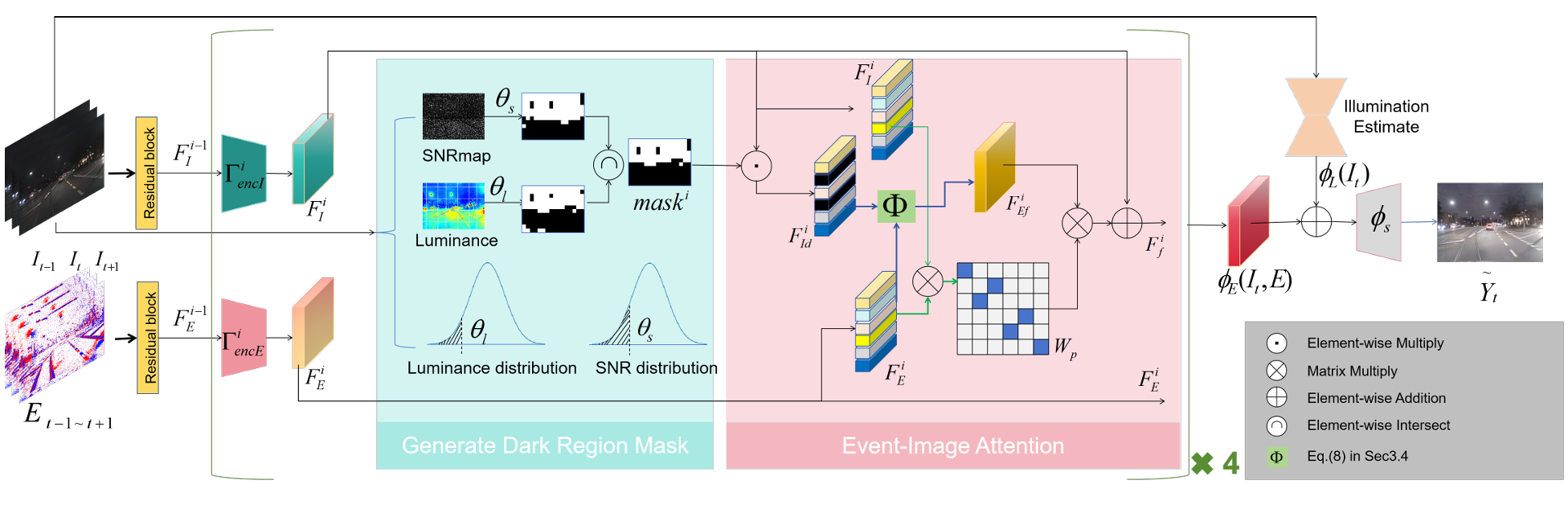

Due to sensor limitations, traditional cameras struggle to capture details in extremely dark areas of videos. The absence of such details can significantly impair low-light video enhancement. In contrast, event cameras offer a visual representation with a higher dynamic range, allowing them to capture motion information even in exceptionally dark conditions. Motivated by this advantage, we propose the Real-Event Embedded Network (REN) for low-light video enhancement. To better exploit events for enhancing extremely dark regions, we propose an Event-Image Fusion module that identifies these dark regions and enhances them significantly. To ensure the temporal stability of the video and restore details in extremely dark areas, we design an unsupervised temporal consistency loss and a detail contrast loss. Together with the supervised loss, these loss functions enable semi-supervised training of the network on unpaired real data. Experimental results on synthetic and real data demonstrate that the proposed method outperforms state-of-the-art methods.
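The abstract gives no formulas for the Event-Image Fusion module or the temporal consistency loss, so the following is only a minimal, hypothetical sketch of the two ideas it names, not REN's actual implementation. It assumes single-channel frames and feature maps stored as equally sized nested lists of floats in [0, 1]; the names `dark_region_mask`, `fuse`, and `temporal_consistency_loss` and the `threshold`/`softness` parameters are illustrative assumptions. The mask applies a soft luminance threshold so that event features dominate in very dark pixels and image features dominate elsewhere, and the loss is a plain mean absolute difference between consecutive enhanced frames.

```python
import math

def _sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def dark_region_mask(frame, threshold=0.1, softness=0.05):
    """Hypothetical soft darkness mask: close to 1 where luminance is well
    below `threshold`, close to 0 where the pixel is clearly brighter."""
    return [[_sigmoid((threshold - p) / softness) for p in row] for row in frame]

def fuse(image_feat, event_feat, mask):
    """Hypothetical per-pixel blend: rely on event features in dark pixels
    (mask near 1) and on image features elsewhere (mask near 0)."""
    return [
        [m * e + (1.0 - m) * i for i, e, m in zip(ri, re, rm)]
        for ri, re, rm in zip(image_feat, event_feat, mask)
    ]

def temporal_consistency_loss(prev_out, curr_out):
    """Mean absolute difference between two consecutive enhanced frames.
    Assumes the frames are already aligned (no motion compensation)."""
    total, count = 0.0, 0
    for row_prev, row_curr in zip(prev_out, curr_out):
        for a, b in zip(row_prev, row_curr):
            total += abs(a - b)
            count += 1
    if count == 0:
        raise ValueError("frames must be non-empty")
    return total / count

# Usage: a black pixel gets a high event weight, a bright one almost none.
mask = dark_region_mask([[0.0, 1.0]])
fused = fuse([[0.2, 0.9]], [[0.6, 0.1]], [[1.0, 0.0]])
loss = temporal_consistency_loss([[0.0, 0.5]], [[0.0, 1.0]])
```

Because the loss needs no ground truth, it can be computed on unpaired real videos, which is what lets losses of this kind support semi-supervised training. A practical version would first align the previous output to the current frame (for example with optical flow) so that genuine motion is not penalized; this sketch skips that step and assumes pre-aligned frames.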

Network Architecture

Citation

@inproceedings{wangExploringExtremelyMM2024,
  author    = {Wang, Xicong and Fu, Huiyuan and Wang, Jiaxuan and Wang, Xin and Zhang, Heng and Ma, Huadong},
  title     = {Exploring in Extremely Dark: Low-Light Video Enhancement with Real Events},
  year      = {2024},
  booktitle = {Proceedings of the 32nd ACM International Conference on Multimedia},
}